In this blog post we will take a look at Network ATC: what it is, and how it can help us deploy Azure Stack HCI clusters and keep them running at their optimal capability.

What is Network ATC?

Network ATC provides an intent-based approach to host network deployment and configuration. It aims to simplify and automate the network configuration process, reducing complexity and the potential for errors by automatically configuring network settings according to best practices.

The benefits of Network ATC include:

- Reduce host networking deployment time, complexity, and errors

- Deploy the latest Microsoft validated and supported best practices

- Ensure configuration consistency across the cluster

- Eliminate configuration drift

How does it work?

When using Network ATC, you use PowerShell to create ‘intents’ based on the networking configuration of your cluster and nodes. Intents are definitions of how you intend to use the physical adapters in your system. An intent has a friendly name, identifies one or more physical adapters, and includes one or more intent types. For example, a management_compute intent sends both management traffic (e.g. domain communications, monitoring, remote management and RDP) and virtual machine (compute) traffic over specific adapters in your systems. The intent ensures that those adapters are configured consistently across the cluster nodes and according to best practices.
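To see those three parts (friendly name, intent types and physical adapters) on a cluster that already has intents, `Get-NetIntent` is a quick way in. This is a minimal sketch run on a cluster node. The selected property names are how I recall the cmdlet's output and may differ between versions, so the second command lists every property in case a column comes back empty.

```powershell
# List each intent with its friendly name, intent type(s) and physical adapters.
# The property names below are assumptions; confirm them with Format-List * if a column is empty.
Get-NetIntent | Select-Object IntentName, IntentType, NetAdapterNamesAsList

# Show every property of a single intent (Management_Compute is the intent created later in this post).
Get-NetIntent -Name Management_Compute | Format-List *
```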

How are Intents used in Azure Stack HCI?

When deploying an Azure Stack HCI cluster (version 23H2 and later), you choose a networking pattern that matches the design of your cluster. Example networking patterns include:

- Group all traffic

- Group management and compute traffic

- Group compute and storage traffic

- Group management and compute (no storage)

Depending on the networking pattern you choose, net intents are created to match the design of your cluster.
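The deployment creates these intents for you. Still, it helps to see roughly how two of the patterns translate into `Add-NetIntent` calls. This is a sketch, not what the deployment literally runs. The intent names and the adapter names `pNIC01` to `pNIC04` are hypothetical, and the two options are alternatives, not commands to run together on one cluster.

```powershell
# Option A - "Group all traffic": one fully converged intent carrying
# management, compute and storage traffic over the same pair of adapters.
Add-NetIntent -Name Converged -Management -Compute -Storage -AdapterName pNIC01, pNIC02

# Option B - "Group compute and storage traffic": management on its own pair of adapters,
# with compute and storage sharing a second pair.
Add-NetIntent -Name Management -Management -AdapterName pNIC01, pNIC02
Add-NetIntent -Name Compute_Storage -Compute -Storage -AdapterName pNIC03, pNIC04
```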

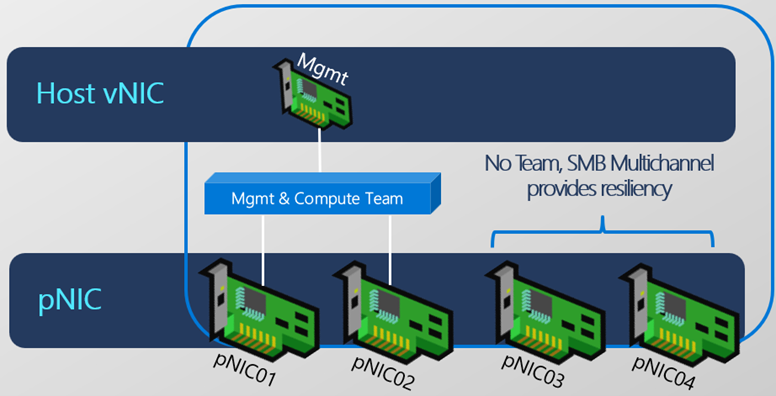

For example, when you choose to group management and compute traffic, two net intents are created: one for management and compute traffic, and another for storage traffic. With the management and compute intent, host management traffic (e.g. domain communications, monitoring, remote management and RDP) and virtual machine (compute) traffic flow over the same interfaces and NICs. A virtual switch is created based on a Switch Embedded Team (SET), and a ‘management OS’ virtual network adapter is created and assigned the host management IP address. The storage intent is applied to separate, dedicated interfaces.
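Once a management and compute intent has been applied, you can check on a node what it built by using the standard Hyper-V and networking cmdlets. This is a minimal sketch. It makes no assumption about the names Network ATC gives the switch or the virtual adapter; it simply lists what exists.

```powershell
# The virtual switch created by the intent; EmbeddedTeamingEnabled is True for a SET switch.
Get-VMSwitch | Select-Object Name, SwitchType, EmbeddedTeamingEnabled

# The physical adapters that are members of the SET team behind each switch.
Get-VMSwitchTeam | Select-Object Name, NetAdapterInterfaceDescription

# The host ('management OS') virtual network adapters and the switch each one connects to.
Get-VMNetworkAdapter -ManagementOS | Select-Object Name, SwitchName

# The host management IP now sits on a vEthernet interface instead of the physical NIC.
Get-NetIPAddress -AddressFamily IPv4 |
    Where-Object InterfaceAlias -like 'vEthernet*' |
    Select-Object InterfaceAlias, IPAddress, PrefixLength
```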

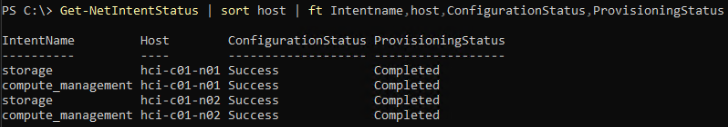

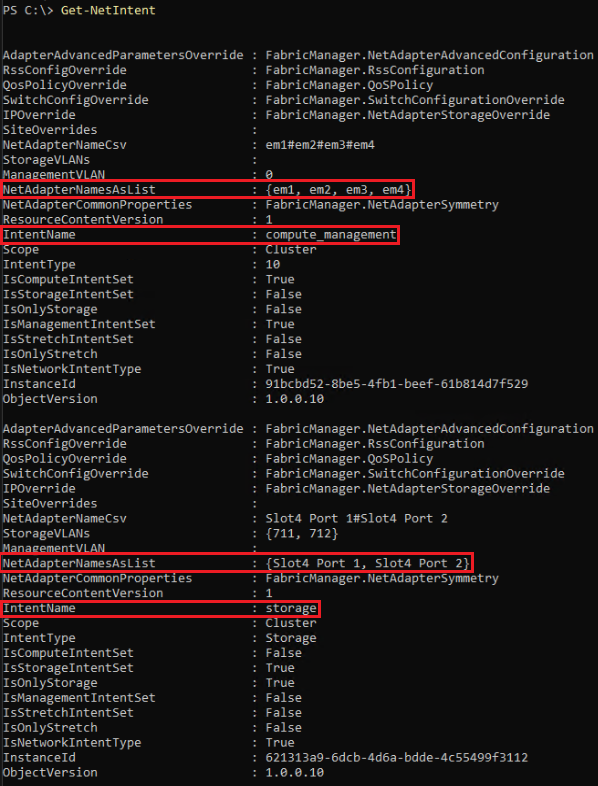

The screenshots above show examples of what these intent configurations look like on the HCI nodes. As you can see, each intent relates to a different set of adapters.

Creating Intents

You can also create intents manually. The prerequisite for using Network ATC is to install the following features on all the cluster nodes:

- Network ATC

- Network HUD

- Hyper-V

- Failover Clustering

- Data Center Bridging

```powershell
Install-WindowsFeature -Name NetworkATC, NetworkHUD, Hyper-V, 'Failover-Clustering', 'Data-Center-Bridging' -IncludeManagementTools
```

For ATC to work, it is best practice to ensure each adapter is in the same PCI slot(s) in each host.
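The features are needed on every node, and the adapters should line up across hosts, so it can save time to handle both from one session. This sketch assumes PowerShell remoting is enabled between the nodes and uses hypothetical node names. Hyper-V needs a restart before it can be used, and this sketch deliberately does not trigger one.

```powershell
# Hypothetical node names: replace with your own cluster nodes.
$nodes = 'HCI-NODE01', 'HCI-NODE02'

# Install the Network ATC prerequisites on every node in one pass (a restart is still required for Hyper-V).
Invoke-Command -ComputerName $nodes -ScriptBlock {
    Install-WindowsFeature -Name NetworkATC, NetworkHUD, Hyper-V, 'Failover-Clustering', 'Data-Center-Bridging' -IncludeManagementTools
}

# Compare adapter names and PCI locations across nodes: each adapter name
# should map to the same bus and slot on every host.
Invoke-Command -ComputerName $nodes -ScriptBlock {
    Get-NetAdapterHardwareInfo | Select-Object Name, Bus, Slot, Function
} | Sort-Object Name, PSComputerName | Format-Table Name, PSComputerName, Bus, Slot, Function
```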

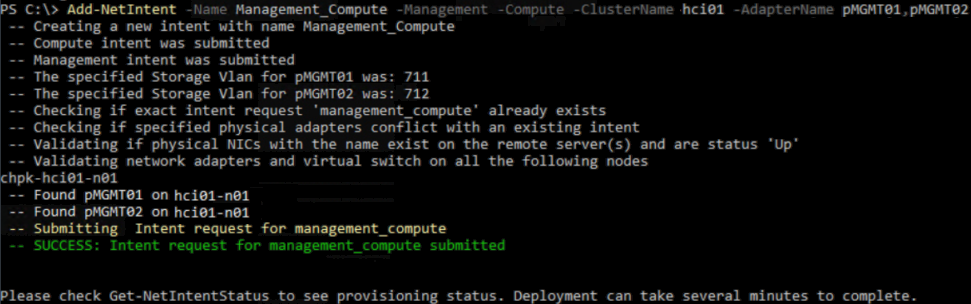

Please see the below example of creating an Intent for compute and management:

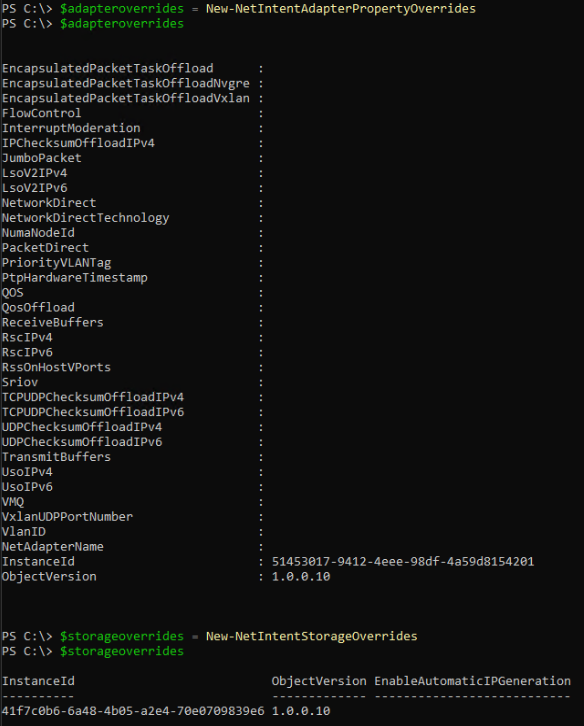

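```powershell
# Adapter overrides for the management/compute adapters: disable RDMA (NetworkDirect) and use 9014-byte jumbo packets
$adapteroverrides = New-NetIntentAdapterPropertyOverrides
$adapteroverrides.NetworkDirect = $false
$adapteroverrides.JumboPacket = "9014"

# Create the management and compute intent on two adapters, with management traffic on VLAN 31
Add-NetIntent -Name Management_Compute -Management -Compute -AdapterName pMGMT01, pMGMT02 -ManagementVlan 31 -AdapterPropertyOverrides $adapteroverrides
```

Please see the below example of creating an intent for storage: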

```powershell
# Storage overrides: turn off automatic IP address generation for the storage adapters
$storageoverrides = New-NetIntentStorageOverrides
$storageoverrides.EnableAutomaticIPGeneration = $false

# Adapter overrides for the storage adapters: 9014-byte jumbo packets
$adapteroverridesstorage = New-NetIntentAdapterPropertyOverrides
$adapteroverridesstorage.JumboPacket = "9014"

# Create the storage intent on two adapters using storage VLANs 711 and 712
Add-NetIntent -Name Storage -Storage -AdapterName pSMB01, pSMB02 -StorageVlans 711, 712 -StorageOverrides $storageoverrides -AdapterPropertyOverrides $adapteroverridesstorage
```

The output of the ‘Add-NetIntent’ command shows the checks and validation performed, and whether the intent was submitted successfully, as per the example output.
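The storage example sets `EnableAutomaticIPGeneration` to `$false`, so Network ATC won't assign storage IP addresses, and you need to add them yourself on each node. This sketch makes several assumptions. First, it assumes the storage intent is not combined with compute, so the storage VLANs sit directly on the physical `pSMB01`/`pSMB02` adapters. Second, it assumes VLAN 711 maps to `pSMB01` and VLAN 712 to `pSMB02`. The subnets and addresses are hypothetical.

```powershell
# Hypothetical addressing for one node: one subnet per storage VLAN.
# Use a different host address on each node (for example .12 on the second node).
$storageIPs = @{
    'pSMB01' = '10.71.1.11'   # assumed to carry VLAN 711
    'pSMB02' = '10.71.2.11'   # assumed to carry VLAN 712
}

foreach ($adapter in $storageIPs.Keys) {
    # Static address with no default gateway: storage traffic stays on its own subnet.
    New-NetIPAddress -InterfaceAlias $adapter -IPAddress $storageIPs[$adapter] -PrefixLength 24
}

# Confirm the addresses landed on the expected adapters.
Get-NetIPAddress -InterfaceAlias pSMB01, pSMB02 -AddressFamily IPv4 |
    Select-Object InterfaceAlias, IPAddress, PrefixLength
```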

Overrides

In the two examples above, you’ll notice some additional parameters that take override objects: ‘$adapteroverrides’, ‘$adapteroverridesstorage’ and ‘$storageoverrides’. When adding intents, you can specify overrides to adjust the default configuration the intents would otherwise apply. For example, adapter overrides let you enable or disable RDMA and set the MTU and buffer sizes. Storage overrides offer only one option: disabling the automatic generation of IP addresses for the storage adapters. Please see the below example outputs of the available override settings:
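You can list the available override settings yourself by creating an override object and printing it. Any property left unset keeps the intent's default. You can also apply overrides to an intent that already exists by using `Set-NetIntent`. In this sketch, the 4088 MTU is only an example and must be a value your adapters support. I'm also assuming that `Set-NetIntent` replaces the intent's adapter overrides as a whole, so re-specify any earlier overrides you want to keep.

```powershell
# Create empty override objects and list their properties; unset properties keep the defaults.
$adapterOverrides = New-NetIntentAdapterPropertyOverrides
$adapterOverrides | Format-List *

$storageOverrides = New-NetIntentStorageOverrides
$storageOverrides | Format-List *

# Example: change the MTU on the existing Storage intent (example value; must be supported by your NICs).
$storageAdapterOverrides = New-NetIntentAdapterPropertyOverrides
$storageAdapterOverrides.JumboPacket = "4088"
Set-NetIntent -Name Storage -AdapterPropertyOverrides $storageAdapterOverrides
```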

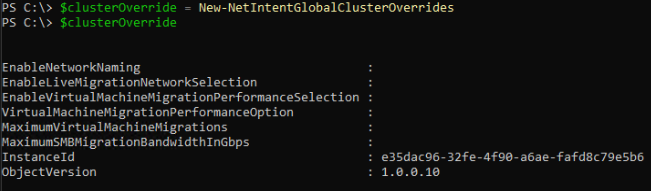

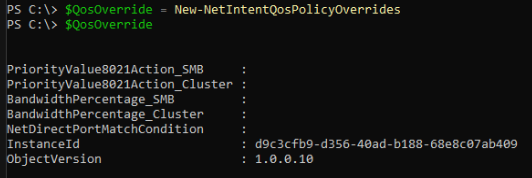

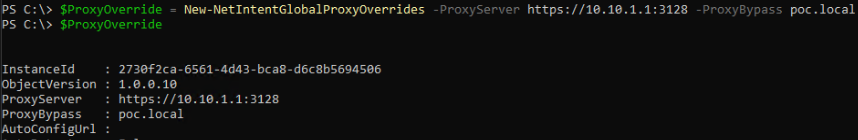

As well as the adapter and storage overrides, there are cluster, QoS and proxy overrides that can be set, as per the screenshots.
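As an example of the other override types, the following sketch changes the QoS (DCB) settings on the storage intent and a cluster-wide live migration setting. The property names are the ones I've seen used with these cmdlets, but treat them as assumptions and check each object with `Format-List *` first. The QoS priorities and bandwidth values must also match what is configured on your physical switches.

```powershell
# QoS override: 802.1p priorities and bandwidth reservation for SMB (storage) and cluster traffic.
$qosOverrides = New-NetIntentQosPolicyOverrides
$qosOverrides | Format-List *        # inspect the available properties first
$qosOverrides.PriorityValue8021Action_SMB = 3
$qosOverrides.PriorityValue8021Action_Cluster = 7
$qosOverrides.BandwidthPercentage_SMB = 50
Set-NetIntent -Name Storage -QosPolicyOverrides $qosOverrides

# Cluster override: a global setting, so it is applied without an intent name.
$clusterOverrides = New-NetIntentGlobalClusterOverrides
$clusterOverrides.EnableVirtualMachineMigrationPerformanceSelection = $false
$clusterOverrides.VirtualMachineMigrationPerformanceOption = 'SMB'
Set-NetIntent -GlobalClusterOverrides $clusterOverrides
```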

Viewing Intents

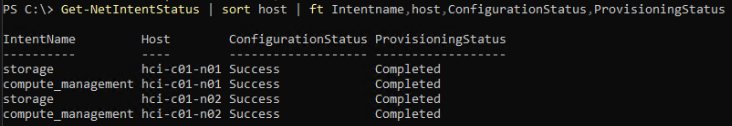

You can view the status of intents using the following command:

```powershell
Get-NetIntentStatus | Sort-Object Host | Format-Table IntentName, Host, ConfigurationStatus, ProvisioningStatus
```
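On larger clusters, it can be easier to show only the nodes where an intent has not applied cleanly, and then ask Network ATC to try again once the cause is fixed. In this sketch, I'm assuming a healthy intent reports a `ConfigurationStatus` of `Success`, and the node name is hypothetical.

```powershell
# Show only intent/node combinations that are not in a successful state.
Get-NetIntentStatus |
    Where-Object ConfigurationStatus -ne 'Success' |
    Sort-Object Host |
    Format-Table IntentName, Host, ConfigurationStatus, ProvisioningStatus

# After fixing the underlying problem, reset the retry state so Network ATC re-attempts provisioning.
Set-NetIntentRetryState -Name Storage -NodeName 'HCI-NODE01'
```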

Conclusion

Network ATC ensures that the deployed configuration stays the same across all cluster nodes. ATC regularly validates the configuration and remediates any unplanned changes. For example, if the MTU on an adapter were changed outside of the intent, ATC would change it back to the specified value.
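To see that consistency for yourself, you can compare the MTU actually set on the adapters across nodes, for example before and after ATC has had a chance to remediate a manual change. This is a sketch with hypothetical node names, using the storage adapters from the earlier example.

```powershell
$nodes = 'HCI-NODE01', 'HCI-NODE02'   # hypothetical node names

# Read the jumbo packet (MTU) setting from the storage adapters on every node.
Invoke-Command -ComputerName $nodes -ScriptBlock {
    Get-NetAdapterAdvancedProperty -Name pSMB01, pSMB02 -RegistryKeyword '*JumboPacket' |
        Select-Object Name, DisplayValue
} | Sort-Object PSComputerName, Name | Format-Table PSComputerName, Name, DisplayValue
```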

I think it’s a great technology and fundamental to network configuration for the latest version of Azure Stack HCI.

Network ATC was introduced in Azure Stack HCI version 21H2 and matured in 22H2. If you are not using Network ATC yet, please note that from version 23H2, ATC intents are mandatory, so I recommend becoming familiar with them. The cloud deployment for 23H2 configures the intents for you, based on the networking pattern and design of your clusters. Even so, it’s always worth understanding how they work, especially if you need to change the default settings using overrides.

Will Network ATC recreate a SET team if it is deleted on one member of the Azure Local cluster?

Hi David, yes, it should be recreated. I tested this in my lab by removing the VMSwitch from one of the nodes. I saw it disappear from the cluster networks, then saw the intent re-validate, and the VMSwitch was recreated. Note that in my case, when the VMSwitch was removed, the management IP fell back to the physical management adapter it was originally assigned to, so the node may still have had management connectivity. If you’re able to test this in your environment, let me know what you see.