In September’s Cumulative Update (KB5017316), released on 13th September 2022, Microsoft included some new cluster parameters related to live migration. This is an important change you should be aware of because, by default, it’ll set the number of concurrent live migrations to 1 and set a bandwidth limit for live migration of 25% of the total SMB / RDMA bandwidth.

What are the new settings?

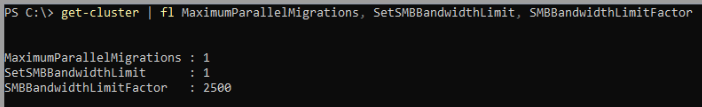

The new Cluster Properties are:

MaximumParallelMigrations – sets the number of parallel live migrations between nodes

SetSMBBandwidthLimit – turns the limit on or off

SMBBandwidthLimitFactor – sets the bandwidth limit for live migration

What the values mean:

MaximumParallelMigrations: 1 = 1 concurrent live migration at a time, 2 = 2 live migrations at a time, etc. Default = 1

SetSMBBandwidthLimit: 0 = disable, 1 = enable. Default = 1

SMBBandwidthLimitFactor: 0 to 10,000. Default = 2500. Divide this value by 100 to get the percentage, so 2500 = 25%.
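The "divide by 100" rule can be shown as a minimal sketch of the two conversions. Python is used here only to illustrate the arithmetic; on a cluster you set the value with PowerShell. The helper names `factor_to_percent` and `percent_to_factor` are hypothetical, and the range check simply mirrors the 0 to 10,000 range above.

```python
# Sketch: convert between an SMBBandwidthLimitFactor value and a percentage
# using the "divide by 100" rule. Helper names are hypothetical, not part of
# any Microsoft module.

FACTOR_MIN = 0
FACTOR_MAX = 10_000  # 10,000 corresponds to 100%


def factor_to_percent(factor: int) -> float:
    """Return the percentage represented by an SMBBandwidthLimitFactor value."""
    if not FACTOR_MIN <= factor <= FACTOR_MAX:
        raise ValueError(f"factor must be between {FACTOR_MIN} and {FACTOR_MAX}")
    return factor / 100


def percent_to_factor(percent: float) -> int:
    """Return the SMBBandwidthLimitFactor value for a desired percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(percent * 100)


if __name__ == "__main__":
    print(factor_to_percent(2500))  # the default factor
    print(percent_to_factor(50))    # the factor for a 50% limit
```

With these helpers the default 2500 maps to 25%, and a 50% limit corresponds to a factor of 5000.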

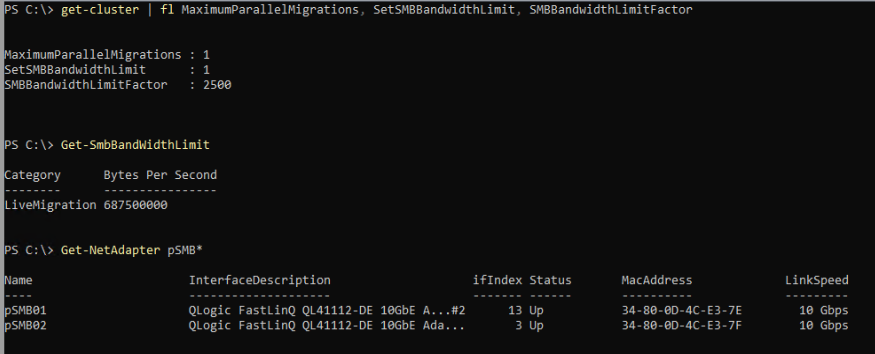

You can view these settings on your cluster by running the following command: `Get-Cluster | fl MaximumParallelMigrations, SetSMBBandwidthLimit, SMBBandwidthLimitFactor`

Why was this change made?

To protect clusters and cluster nodes from losing communication when live migrations consume all the SMB bandwidth.

The cluster-wide SMB bandwidth limit and factor control the automatic setting of an SMB bandwidth limit for cluster traffic. The default reserves 25% of the SMB bandwidth between nodes for the cluster, so live migration traffic doesn’t saturate the storage / SMB network and cause instability.

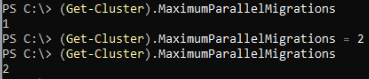

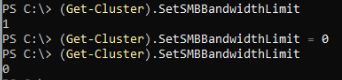

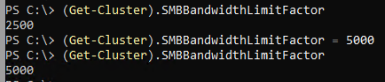

Changing the Defaults

If you are confident your cluster(s) can handle more parallel live migrations and / or a higher bandwidth limit than the defaults, then you can adjust these new parameters as shown in the examples below:

Set the concurrent live migrations to 2: `(Get-Cluster).MaximumParallelMigrations = 2`

Turn off the bandwidth limit: `(Get-Cluster).SetSMBBandwidthLimit = 0`

Set the bandwidth limit percentage to 50%: `(Get-Cluster).SMBBandwidthLimitFactor = 5000`

Bandwidth Limits

As has always been the case, for bandwidth limits to work you’ll need the SMB Bandwidth Limit feature (FS-SMBBW) installed on all the nodes.

You’ll also need to set the Live Migration performance option to SMB on each node: `Set-VMHost -VirtualMachineMigrationPerformanceOption SMB`

Because the new cluster bandwidth limit parameter (SMBBandwidthLimitFactor) is a factor, the cluster calculates a value in bytes per second for the set percentage of the overall SMB bandwidth available (usually your storage RDMA network) and uses that value to set the SMB bandwidth limit (SmbBandwidthLimit).
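Here's a rough Python sketch of that calculation. It assumes the factor is applied linearly to the combined link speed of the SMB adapters, and that link speeds are decimal (10GbE = 10 x 10^9 bit/s). `naive_limit_bytes_per_sec` is a hypothetical name, not a Microsoft function. The value the cluster actually sets can be somewhat higher, so use `Get-SmbBandwidthLimit` on a node to see the real figure.

```python
# Naive estimate of the SMB bandwidth limit derived from SMBBandwidthLimitFactor.
# ASSUMPTION: the factor is applied linearly to the combined link speed of the
# SMB adapters. Real clusters were observed to set a somewhat higher value.

BITS_PER_BYTE = 8
FACTOR_FULL_SCALE = 10_000  # a factor of 10,000 means 100%


def naive_limit_bytes_per_sec(adapter_count: int, link_gbps: float,
                              factor: int = 2500) -> float:
    """Return factor/10,000 of the combined adapter bandwidth, in bytes/s."""
    total_bits_per_sec = adapter_count * link_gbps * 1e9  # decimal Gbps
    total_bytes_per_sec = total_bits_per_sec / BITS_PER_BYTE
    return total_bytes_per_sec * factor / FACTOR_FULL_SCALE


if __name__ == "__main__":
    for speed in (10, 40, 100):
        estimate = naive_limit_bytes_per_sec(2, speed)
        print(f"2 x {speed}Gb, default factor: {estimate:,.0f} Bps")
```

For 2 x 10GbE this gives 625,000,000 Bps, the flat 25% figure.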

The example below shows what this looks like with the default 25% on a cluster with 2 x 10GbE adapters:

As you can see, the bandwidth limit is set to 687500000 Bps, which is 5.5 Gbps. However, this is slightly more than 25% of the overall bandwidth, and I found that this additional ‘margin’ grows as the adapters’ link speed increases. In the above example we have a total of 20 Gbps over the 2 x 10GbE adapters, and 25% of that is 5 Gbps (or 625000000 Bps). I guess this is because the value is based on a factor!?
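A quick calculation shows how much "slightly more than 25%" is. This Python sketch uses only the numbers from the 2 x 10GbE example. `effective_percent` is a hypothetical helper, and decimal units are assumed (1 Gbps = 10^9 bit/s).

```python
# How far the observed 2 x 10GbE limit sits above a flat 25%.
# Numbers come from the example above; decimal units are assumed.

def effective_percent(limit_bps: float, adapter_count: int, link_gbps: float) -> float:
    """Share (%) of the combined link speed represented by a bytes/s limit."""
    total_bits_per_sec = adapter_count * link_gbps * 1e9
    return limit_bps * 8 / total_bits_per_sec * 100


observed_bps = 687_500_000               # limit the cluster actually set
flat_25_bps = 2 * 10 * 1e9 / 8 * 0.25    # 25% of 20 Gbps, in bytes/s
margin_gbps = (observed_bps - flat_25_bps) * 8 / 1e9

print(f"effective share: {effective_percent(observed_bps, 2, 10):.1f}%")
print(f"margin over a flat 25%: {margin_gbps:.1f} Gbps")
```

For this example the limit works out to 27.5% of the 20 Gbps total, 0.5 Gbps above a flat 25%.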

Below are examples of what the bandwidth value is set to for 10, 40, and 100Gbps Adapters:

| Adapters | Bandwidth limit (Bps) \*\* | GBps | Gbps | 4 x Gbps \* |
|---|---|---|---|---|
| 2 x 10Gb | 687500000 | 0.69 | 5.5 | 22 |
| 2 x 40Gb | 2575812000 | 2.58 | 20.61 | 82.4 |
| 2 x 100Gb | 7812500000 | 7.81 | 62.5 | 250 |

\* 4 x is used because the default is 25%, so 4 x = 100%.

\*\* These calculations may be different in your clusters or be updated by MS over time; they are just my observations.
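The GBps, Gbps and 4 x figures can be derived from the raw byte values with a few lines of Python. This sketch covers only the unit conversions and assumes decimal units (1 GBps = 10^9 bytes/s, 1 Gbps = 10^9 bit/s). Working from the raw values rather than the rounded GBps figures gives about 20.61 Gbps for the 40Gb case and exactly 62.5 Gbps for the 100Gb case.

```python
# Derive GBps, Gbps and the "4 x" figure from the observed byte-per-second limits.
# Decimal units: 1 GBps = 10**9 bytes/s, 1 Gbps = 10**9 bits/s.

OBSERVED_LIMITS_BPS = {
    "2 x 10Gb": 687_500_000,
    "2 x 40Gb": 2_575_812_000,
    "2 x 100Gb": 7_812_500_000,
}


def describe(limit_bps: int) -> tuple[float, float, float]:
    """Return (GBps, Gbps, 4 x Gbps) for a bytes-per-second limit."""
    gbytes = limit_bps / 1e9
    gbits = limit_bps * 8 / 1e9
    return gbytes, gbits, 4 * gbits  # 4 x because the default factor is 25%


for name, bps in OBSERVED_LIMITS_BPS.items():
    gbytes, gbits, four_x = describe(bps)
    print(f"{name}: {gbytes:.2f} GBps = {gbits:.2f} Gbps, 4 x = {four_x:.2f} Gbps")
```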

Conclusion

My opinion is that this is a positive change, and one that arguably should have been made a long time ago. Controlling live migration with cluster parameters is a lot easier than before, when the settings had to be made on a per-node basis, and it ensures consistency. It should also reduce the number of bandwidth exhaustion issues reported to Microsoft and mean a better customer experience.

The defaults of 1 x parallel live migration and a 25% bandwidth limit are sensible for most clusters, although they might be a bit conservative for higher-performance clusters, especially in a non-converged network design. If, for example, you have separate storage adapters running at 100Gbps, then 2+ parallel migrations and a 50% bandwidth limit could be more optimal. With a switchless design you may also be able to increase from the defaults. The key is to test and compare sequential live migrations with multiple in parallel, observing the time it takes to migrate VMs and the bandwidth utilization. As ever, please test for yourself!!

I hope you found this blog useful, and please check your clusters if you’ve recently patched them with the September CU!

P.S. This also applies to Windows Server HCI clusters.

Hello,

This is an awesome article and gives a lot of insight.

I have a query about my setup.

It is a server with 3 NICs, each port with 25 Gb capacity.

For storage, we are using a switched, disaggregated model (2 ports for Management, 2 ports for Compute and 2 for Storage).

Teaming is enabled on Management and Compute only. Storage uses storage network 1 and storage network 2.

My question is: what SMB bandwidth limit should I apply for this scenario?

We are using Network ATC. What should the maximum SMB bandwidth limit be?

When I run `Get-NetQosTrafficClass -Name SMB_Direct`, I see priority 3, BandwidthPercentage 50 and Algorithm ETS, so SMB traffic has 50% of the bandwidth. This is for the storage adapters alone.

So does this mean that, out of the 25 Gbps per port, only 12.5 Gbps is available for SMB traffic? And considering the 25% suggestion above, should the limit be 25% of this 12.5 Gbps, which amounts to 3.xx Gbps?

How do I handle Validate-DCB validation? It’s not passing with the current values, and I see in the modal.units.test file that the adapter link speed is set to 10*1000000000. I edited this to 25*1000000000 and changed the percentage calculation in the formula there to 50%. When I now set the values to 4, 5 or 6GB, the tests pass.

Also, I’m applying the SMB bandwidth limit via the GlobalOverrides. I can’t use decimal values like 6.25 Gb, for example; I’m only able to use whole-number values like 2GB or 3GB.

Is there a way to apply decimal values for SMB bandwidth limits? And any idea what the optimal solution would be for this scenario?

Some math to understand the setup or calculation here would be really helpful.

Any help is greatly appreciated.

Hi Deepak, is this 22H2 or 23H2 Azure Local?

What is the ‘SetSMBBandwidthLimit’ value set to when you run `Get-Cluster | fl MaximumParallelMigrations, SetSMBBandwidthLimit, SMBBandwidthLimitFactor`?

There are two different things here. The bandwidth percentage in the SMB_Direct traffic class is a minimum guarantee, not a fixed allocation; it’s a reservation for QoS. If SetSMBBandwidthLimit is set to 1, then SMBBandwidthLimitFactor comes into play and limits SMB bandwidth based on its value. So, under ‘nominal’ conditions, SMB bandwidth isn’t limited by the QoS traffic class and then additionally by the limit factor; it’s limited only by the limit factor.
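Here's a sketch that puts rough numbers on this for your setup (Python, arithmetic only). It rests on two assumptions. First, the limit factor applies linearly to the combined speed of the two 25 Gb storage adapters. Second, the 50% ETS value is a per-port floor rather than a cap. The function names are made up for illustration. The linear rule is also only an approximation: the measurements in the post came out a little higher.

```python
# Worked example for two 25 Gb storage adapters.
# ASSUMPTIONS: the limit factor applies linearly to the combined storage link
# speed, and the 50% ETS value for SMB_Direct is a per-port minimum guarantee.

STORAGE_ADAPTERS = 2
LINK_GBPS = 25


def limit_gbps(adapters: int, link_gbps: float, factor: int = 2500) -> float:
    """Cap on SMB live migration traffic implied by SMBBandwidthLimitFactor."""
    return adapters * link_gbps * factor / 10_000


def ets_guarantee_gbps(link_gbps: float, ets_percent: float = 50) -> float:
    """Bandwidth SMB_Direct is guaranteed on one port when that port is congested."""
    return link_gbps * ets_percent / 100


cap = limit_gbps(STORAGE_ADAPTERS, LINK_GBPS)    # across both storage ports
cap_bytes_per_sec = cap * 1e9 / 8                # same cap in bytes/s
floor_per_port = ets_guarantee_gbps(LINK_GBPS)   # a floor under contention, not a cap
stacked = floor_per_port * 0.25                  # the "25% of 12.5" reading

print(f"limit factor cap: {cap} Gbps ({cap_bytes_per_sec:,.0f} Bps)")
print(f"ETS guarantee per port: {floor_per_port} Gbps")
print(f"stacked reading from the question: {stacked} Gbps")
```

Under these assumptions the default factor caps live migration at about 12.5 Gbps across both ports, rather than the 3.125 Gbps you get by stacking the two percentages.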

As you have found, I don’t believe decimals are supported for the bandwidth value in the cluster override.

You are on the right track in testing the limit factor value for your environment. Most people find the defaults acceptable; if your tests show you can adjust this to be more optimal for you, then great!